Bitca provides a beautiful Human UI for interacting with your humans.

Let’s take it for a spin: create a file named playground.py.

playground.py

Authenticate with bitca by running the following command:

bitca auth

or by exporting the BITCA_API_KEY for your workspace from bitca.app.

Install dependencies and run the Human Ambient:

Open the link provided or navigate to http://projectbit.ca/ambient (login required)

Select the localhost:7777 endpoint and start chatting with your humans!

The Human Ambient includes a few demo humans that you can test with. If you have recommendations for other humans we should build, please let us know in the community forum.

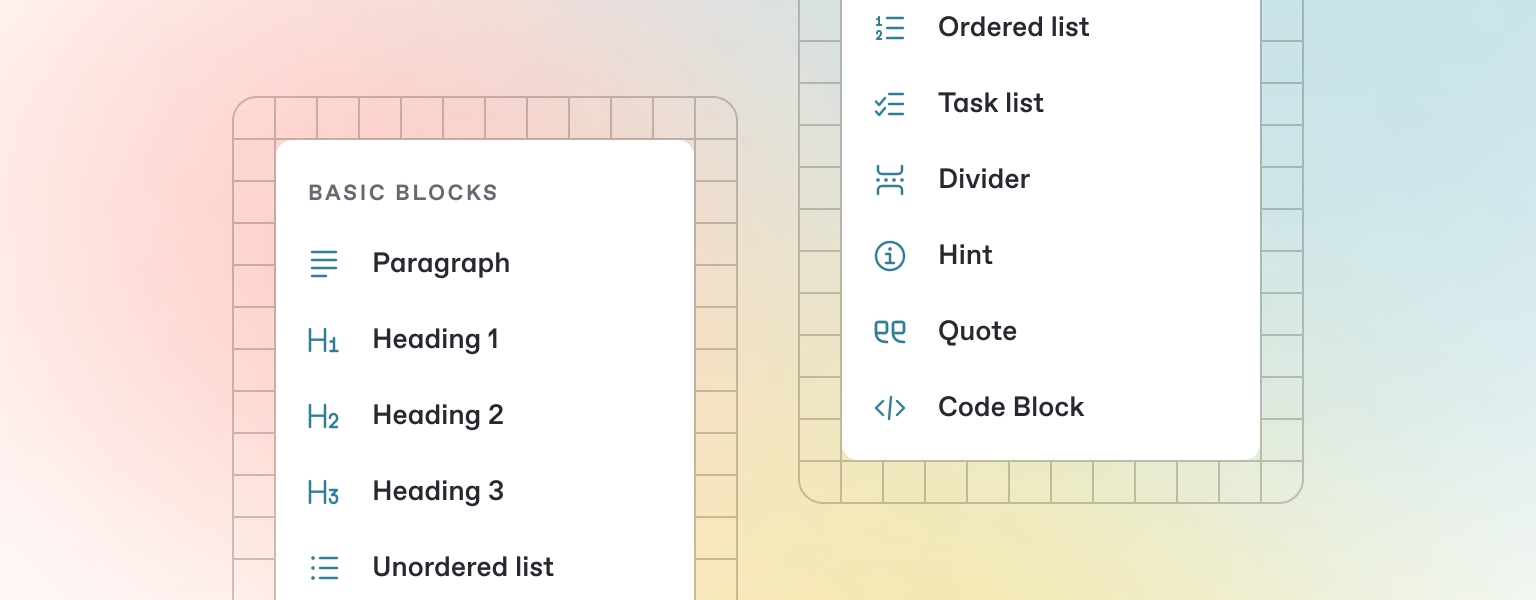

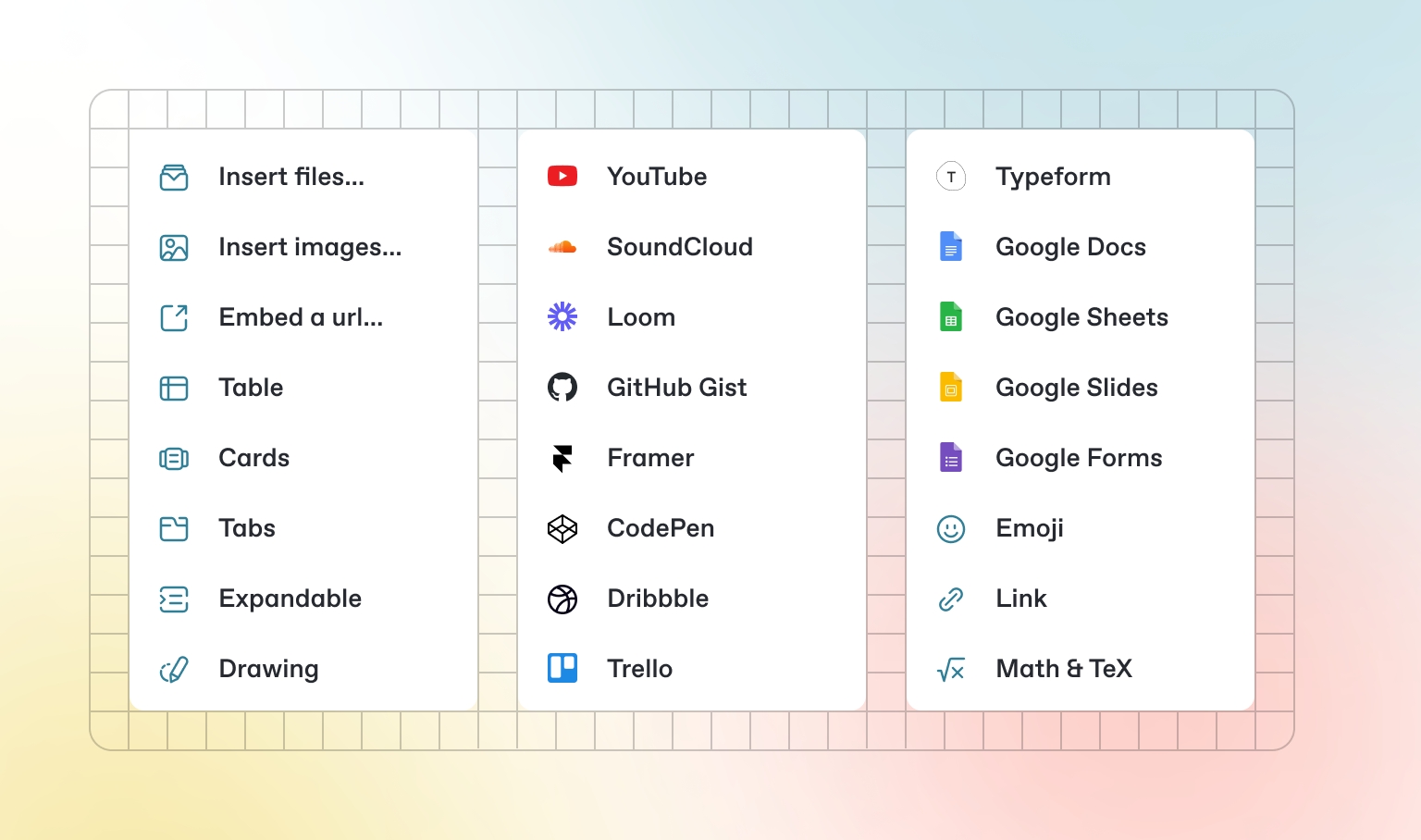


Thank you for building with bitca. If you need help, please come chat with us on Discord or post your questions on the community forum.


from bitca.human import Human
from bitca.model.openai import OpenAIChat
from bitca.storage.human.sqlite import SqlhumanStorage
from bitca.tools.duckduckgo import DuckDuckGo
from bitca.tools.yfinance import YFinanceTools
from bitca.playground import Playground, serve_playground_app

# Searches the web and always cites its sources
web_human = Human(
    name="Web Human",
    model=OpenAIChat(id="gpt-4o"),
    tools=[DuckDuckGo()],
    instructions=["Always include sources"],
    storage=SqlhumanStorage(table_name="web_human", db_file="humans.db"),
    add_history_to_messages=True,
    markdown=True,
)

# Answers finance questions with Yahoo Finance data, shown as tables
finance_human = Human(
    name="Finance Human",
    model=OpenAIChat(id="gpt-4o"),
    tools=[YFinanceTools(stock_price=True, analyst_recommendations=True, company_info=True, company_news=True)],
    instructions=["Use tables to display data"],
    storage=SqlhumanStorage(table_name="finance_human", db_file="humans.db"),
    add_history_to_messages=True,
    markdown=True,
)

app = Playground(humans=[finance_human, web_human]).get_app()

if __name__ == "__main__":
    # "playground:app" points at the app object defined in playground.py
    serve_playground_app("playground:app", reload=True)

Serve your agents via an API and connect them to your product.

Monitor, evaluate and improve your AI product.

We provide dedicated support and development; book a call to get started. Our prices start at $20k/month, and we specialize in taking companies from idea to production within 3 months.

Any Python function can be used as a tool by an Agent. We highly recommend creating functions specific to your workflow and adding them to your Agents.

For example, here’s how to use a get_top_hackernews_stories function as a tool:

hn_agent.py

import json

import httpx

from bitca.agent import Agent


def get_top_hackernews_stories(num_stories: int = 10) -> str:
    """Use this function to get top stories from Hacker News.

    Args:
        num_stories (int): Number of stories to return. Defaults to 10.

    Returns:
        str: JSON string of top stories.
    """
    # Fetch top story IDs
    response = httpx.get('https://hacker-news.firebaseio.com/v0/topstories.json')
    story_ids = response.json()

    # Fetch story details
    stories = []
    for story_id in story_ids[:num_stories]:
        story_response = httpx.get(f'https://hacker-news.firebaseio.com/v0/item/{story_id}.json')
        story = story_response.json()
        # Drop the (possibly long) story body to keep the tool output small
        story.pop("text", None)
        stories.append(story)
    return json.dumps(stories)


agent = Agent(tools=[get_top_hackernews_stories], show_tool_calls=True, markdown=True)
agent.print_response("Summarize the top 5 stories on hackernews?", stream=True)

Tools are functions that an Agent can run, like searching the web, running SQL, sending an email or calling APIs. Use tools to integrate Agents with external systems. You can use any Python function as a tool or use a pre-built toolkit. The general syntax is:

from bitca.agent import Agent

agent = Agent(
    # Add functions or Toolkits
    tools=[...],
    # Show tool calls in the Agent response
    show_tool_calls=True,
)

Read more about:

setx BITCA_API_KEY bitca-***

pip install 'fastapi[standard]' sqlalchemy

python playground.py

Bitca comes with built-in monitoring and debugging.

You can set monitoring=True on any agent to log that agent’s sessions or set BITCA_MONITORING=true in your environment to log all agent sessions.
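The two switches above (a per-agent argument and an environment variable) can be pictured as a flag with an environment fallback. The sketch below is a hypothetical illustration: resolve_flag is an invented helper, and it assumes that an explicit argument takes precedence and that only the string "true" (any case) enables the flag. bitca's actual resolution logic may differ; the same pattern would apply to debug_mode and BITCA_DEBUG.

```python
import os
from typing import Optional


def resolve_flag(explicit: Optional[bool], env_var: str) -> bool:
    """Hypothetical helper: an explicit per-agent value wins, otherwise
    fall back to an environment variable such as BITCA_MONITORING."""
    if explicit is not None:
        return explicit
    # Only the string "true" (any case) enables the flag; unset means disabled
    return os.getenv(env_var, "").strip().lower() == "true"


os.environ["BITCA_MONITORING"] = "true"
print(resolve_flag(None, "BITCA_MONITORING"))   # True, taken from the environment
print(resolve_flag(False, "BITCA_MONITORING"))  # False, the explicit value wins
```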

Create a file monitoring.py with the following code:

monitoring.py

from bitca.agent import Agent

agent = Agent(markdown=True, monitoring=True)
agent.print_response("Share a 2 sentence horror story")

Authenticate with bitca by running the following command:

bitca auth

or by exporting the BITCA_API_KEY for your workspace from bitca.app.

Run the agent and view the session on bitca.app/sessions

Bitca also includes a built-in debugger that will show debug logs in the terminal. You can set debug_mode=True on any agent to view debug logs or set BITCA_DEBUG=true in your environment.

debugging.py

Run the agent to view debug logs in the terminal:

Many advanced use cases will require writing custom Toolkits. Here’s the general flow:

1. Create a class that inherits from the bitca.tools.Toolkit class.
2. Add your functions to the class.
3. Important: register the functions using self.register(function_name).
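To see why the registration step matters, here is a plain-Python sketch of the pattern: the base class keeps a name-to-function map, and only registered methods can be called as tools. MiniToolkit, EchoTools and the call method are hypothetical stand-ins written for this illustration, not bitca's Toolkit class.

```python
from typing import Callable, Dict


class MiniToolkit:
    """Hypothetical stand-in for a toolkit base class."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.functions: Dict[str, Callable] = {}

    def register(self, function: Callable) -> None:
        # Only registered functions are exposed to the agent
        self.functions[function.__name__] = function

    def call(self, function_name: str, **kwargs) -> str:
        if function_name not in self.functions:
            return f"Error: {function_name} is not a registered tool"
        return self.functions[function_name](**kwargs)


class EchoTools(MiniToolkit):
    def __init__(self) -> None:
        super().__init__(name="echo_tools")
        self.register(self.echo)  # without this line the tool stays hidden

    def echo(self, text: str) -> str:
        """Returns the input text unchanged."""
        return text

    def helper(self) -> str:
        # Never registered, so it cannot be called as a tool
        return "hidden"


tools = EchoTools()
print(tools.call("echo", text="hi"))  # hi
print(tools.call("helper"))           # Error: helper is not a registered tool
```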

Now your Toolkit is ready to use with an Agent. For example:

shell_toolkit.py

Crawl4aiTools enable an Agent to perform web crawling and scraping tasks using the Crawl4ai library.

The following example requires the crawl4ai library.

The following agent will scrape the content from the webpage:

cookbook/tools/crawl4ai_tools.py

NewspaperTools enable an Agent to read news articles using the Newspaper3k library.

The following example requires the newspaper3k library.

The following agent will summarize the Wikipedia article on language models.

cookbook/tools/newspaper_tools.py

One of our favorite features is using Agents to generate structured data (i.e. a Pydantic model). Use this feature to extract features, classify data, produce fake data, etc. The best part is that they
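Under the hood, structured output means the model returns JSON that is then validated against a schema. bitca uses Pydantic models for this; the standard-library sketch below only illustrates the parse-and-validate step with a dataclass. parse_structured is a hypothetical helper, the MovieScript fields are a trimmed copy of the example model, and the sample reply is made up.

```python
import json
from dataclasses import dataclass, fields
from typing import List


@dataclass
class MovieScript:
    setting: str
    genre: str
    name: str
    characters: List[str]


def parse_structured(raw: str) -> MovieScript:
    """Parse a JSON model reply and fail loudly if required fields are missing."""
    data = json.loads(raw)
    missing = [f.name for f in fields(MovieScript) if f.name not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    # Ignore any extra keys the model may have added
    return MovieScript(**{f.name: data[f.name] for f in fields(MovieScript)})


reply = '{"setting": "New York", "genre": "Thriller", "name": "Example", "characters": ["A", "B"], "extra": 1}'
script = parse_structured(reply)
print(script.genre)  # Thriller
```

Pydantic adds type coercion and richer error messages on top of this basic check.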

Language Models are machine-learning programs that are trained to understand natural language and code. They provide reasoning and planning capabilities to Agents.

Use any model with an Agent like:

Bitca supports the following model providers:

pip install -U crawl4ai

pip install -U newspaper3k

export BITCA_API_KEY=bitca-***

python monitoring.py

import subprocess
from typing import List

from bitca.tools import Toolkit
from bitca.utils.log import logger


class ShellTools(Toolkit):
    def __init__(self):
        super().__init__(name="shell_tools")
        # Expose run_shell_command to the Agent
        self.register(self.run_shell_command)

    def run_shell_command(self, args: List[str], tail: int = 100) -> str:
        """Runs a shell command and returns the output or error.

        Args:
            args (List[str]): The command to run as a list of strings.
            tail (int): The number of lines to return from the output.

        Returns:
            str: The output of the command.
        """
        try:
            logger.info(f"Running shell command: {args}")
            result = subprocess.run(args, capture_output=True, text=True)
            logger.debug(f"Result: {result}")
            logger.debug(f"Return code: {result.returncode}")
            if result.returncode != 0:
                return f"Error: {result.stderr}"
            # Return only the last `tail` lines of the output
            return "\n".join(result.stdout.split("\n")[-tail:])
        except Exception as e:
            logger.warning(f"Failed to run shell command: {e}")
            return f"Error: {e}"

| Parameter | Type | Default | Description |
|---|---|---|---|
| max_length | int | 1000 | Specifies the maximum length of the text from the webpage to be returned. |

| Function | Description |
|---|---|
| web_crawler | Crawls a website using crawl4ai’s WebCrawler. Parameters include url for the URL to crawl and an optional max_length to limit the length of extracted content. The default value for max_length is 1000. |

| Parameter | Type | Default | Description |
|---|---|---|---|
| get_article_text | bool | True | Enables the functionality to retrieve the text of an article. |

| Function | Description |
|---|---|
| get_article_text | Retrieves the text of an article from a specified URL. Parameters include url for the URL of the article. Returns the text of the article or an error message if the retrieval fails. |

Create a Team Leader that can delegate tasks to team members.

Use your Agent team just like you would use a regular Agent.
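Conceptually, the team leader decides which member handles each step and hands it the relevant input. In the bitca example the leader's model makes that decision from its instructions; the toy sketch below hard-codes the routing instead, so the Member type, the delegate function and the canned member replies are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Member:
    name: str
    role: str
    run: Callable[[str], str]


def delegate(members: Dict[str, Member], plan: List[Tuple[str, str]]) -> List[str]:
    """Send each (member_key, task) step to the named member, in order."""
    results = []
    for member_key, task in plan:
        member = members[member_key]
        results.append(f"{member.name}: {member.run(task)}")
    return results


# Canned replies stand in for real agents
team = {
    "researcher": Member("HackerNews Researcher", "Gets top stories from hackernews.", lambda t: f"top stories about {t}"),
    "reader": Member("Article Reader", "Reads articles from URLs.", lambda t: f"summary of {t}"),
}
for line in delegate(team, [("researcher", "AI"), ("reader", "https://example.com")]):
    print(line)
```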

from bitca.agent import Agent
from bitca.tools.hackernews import HackerNews
from bitca.tools.duckduckgo import DuckDuckGo
from bitca.tools.newspaper4k import Newspaper4k

hn_researcher = Agent(
    name="HackerNews Researcher",
    role="Gets top stories from hackernews.",
    tools=[HackerNews()],
)

web_searcher = Agent(
    name="Web Searcher",
    role="Searches the web for information on a topic",
    tools=[DuckDuckGo()],
    add_datetime_to_instructions=True,
)

article_reader = Agent(
    name="Article Reader",
    role="Reads articles from URLs.",
    tools=[Newspaper4k()],
)

# The team leader delegates each step to the matching member
hn_team = Agent(
    name="Hackernews Team",
    team=[hn_researcher, web_searcher, article_reader],
    instructions=[
        "First, search hackernews for what the user is asking about.",
        "Then, ask the article reader to read the links for the stories to get more information.",
        "Important: you must provide the article reader with the links to read.",
        "Then, ask the web searcher to search for each story to get more information.",
        "Finally, provide a thoughtful and engaging summary.",
    ],
    show_tool_calls=True,
    markdown=True,
)

hn_team.print_response("Write an article about the top 2 stories on hackernews", stream=True)

from typing import List

from rich.pretty import pprint
from pydantic import BaseModel, Field

from bitca.agent import Agent, RunResponse
from bitca.model.openai import OpenAIChat


class MovieScript(BaseModel):
    setting: str = Field(..., description="Provide a nice setting for a blockbuster movie.")
    ending: str = Field(..., description="Ending of the movie. If not available, provide a happy ending.")
    genre: str = Field(
        ..., description="Genre of the movie. If not available, select action, thriller or romantic comedy."
    )
    name: str = Field(..., description="Give a name to this movie")
    characters: List[str] = Field(..., description="Name of characters for this movie.")
    storyline: str = Field(..., description="3 sentence storyline for the movie. Make it exciting!")


# Agent that uses JSON mode
json_mode_agent = Agent(
    model=OpenAIChat(id="gpt-4o"),
    description="You write movie scripts.",
    response_model=MovieScript,
)

# Agent that uses structured outputs
structured_output_agent = Agent(
    model=OpenAIChat(id="gpt-4o-2024-08-06"),
    description="You write movie scripts.",
    response_model=MovieScript,
    structured_outputs=True,
)

# Get the response in a variable
# json_mode_response: RunResponse = json_mode_agent.run("New York")
# pprint(json_mode_response.content)
# structured_output_response: RunResponse = structured_output_agent.run("New York")
# pprint(structured_output_response.content)

json_mode_agent.print_response("New York")
structured_output_agent.print_response("New York")

pip install -U bitca openai

python movie_agent.py

OpenAILike also supports all the params of OpenAIChat.

from os import getenv

from bitca.agent import Agent, RunResponse
from bitca.model.openai.like import OpenAILike

agent = Agent(
    model=OpenAILike(
        id="mistralai/Mixtral-8x7B-Instruct-v0.1",
        api_key=getenv("TOGETHER_API_KEY"),
        base_url="https://api.together.xyz/v1",
    )
)

# Get the response in a variable
# run: RunResponse = agent.run("Share a 2 sentence horror story.")
# print(run.content)

# Print the response in the terminal
agent.print_response("Share a 2 sentence horror story.")

| Parameter | Type | Default | Description |
|---|---|---|---|
| get_top_stories | bool | True | Enables fetching top stories. |
| get_user_details | bool | True | Enables fetching user details. |

| Function | Description |
|---|---|
| get_top_hackernews_stories | Retrieves the top stories from Hacker News. Parameters include num_stories to specify the number of stories to return (default is 10). Returns the top stories in JSON format. |
| get_user_details | Retrieves the details of a Hacker News user by their username. Parameters include username to specify the user. Returns the user details in JSON format. |
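Both functions map onto the public Hacker News Firebase API (topstories.json, item/{id}.json and user/{username}.json). The sketch below shows the URL building and the trimming step used in hn_agent.py (keeping the first num_stories items and dropping the potentially long "text" field) without making any network calls; all helper names are hypothetical.

```python
from typing import Any, Dict, List

HN_API = "https://hacker-news.firebaseio.com/v0"


def top_stories_url() -> str:
    return f"{HN_API}/topstories.json"


def item_url(item_id: int) -> str:
    return f"{HN_API}/item/{item_id}.json"


def user_url(username: str) -> str:
    return f"{HN_API}/user/{username}.json"


def trim_stories(stories: List[Dict[str, Any]], num_stories: int = 10) -> List[Dict[str, Any]]:
    """Keep the first num_stories items and drop their 'text' bodies to save tokens."""
    return [{k: v for k, v in story.items() if k != "text"} for story in stories[:num_stories]]


print(item_url(8863))  # https://hacker-news.firebaseio.com/v0/item/8863.json
```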

cookbook/tools/aws_lambda_tools.py

| Parameter | Type | Default | Description |
|---|---|---|---|
| region_name | str | "us-east-1" | AWS region name where Lambda functions are located. |

| Function | Description |
|---|---|
| list_functions | Lists all Lambda functions available in the AWS account. |
| invoke_function | Invokes a specific Lambda function with an optional payload. Takes function_name and optional payload parameters. |

| Parameter | Type | Default | Description |
|---|---|---|---|
| api_key | str | None | If you want to manually supply the GIPHY API key. |
| limit | int | 1 | The number of GIFs to return in a search. |

| Function | Description |
|---|---|
| search_gifs | Searches GIPHY for a GIF based on the query string. |

from bitca.agent import Agent

agent = Agent(markdown=True, debug_mode=True)
agent.print_response("Share a 2 sentence horror story")

python debugging.py

from bitca.agent import Agent
from bitca.tools.crawl4ai_tools import Crawl4aiTools

agent = Agent(tools=[Crawl4aiTools(max_length=None)], show_tool_calls=True)
agent.print_response("Tell me about https://github.com/bitca/bitca.")

from bitca.agent import Agent
from bitca.tools.newspaper_tools import NewspaperTools

agent = Agent(tools=[NewspaperTools()])
agent.print_response("Please summarize https://en.wikipedia.org/wiki/Language_model")

pip install -U openai duckduckgo-search newspaper4k lxml_html_clean bitca

python hn_team.py

# Using JSON mode

MovieScript(
│   setting='The bustling streets of New York City, filled with skyscrapers, secret alleyways, and hidden underground passages.',
│   ending='The protagonist manages to thwart an international conspiracy, clearing his name and winning the love of his life back.',
│   genre='Thriller',
│   name='Shadows in the City',
│   characters=['Alex Monroe', 'Eva Parker', 'Detective Rodriguez', 'Mysterious Mr. Black'],
│   storyline="When Alex Monroe, an ex-CIA operative, is framed for a crime he didn't commit, he must navigate the dangerous streets of New York to clear his name. As he uncovers a labyrinth of deceit involving the city's most notorious crime syndicate, he enlists the help of an old flame, Eva Parker. Together, they race against time to expose the true villain before it's too late."
)

# Use the structured output
MovieScript(
│   setting='In the bustling streets and iconic skyline of New York City.',
│   ending='Isabella and Alex, having narrowly escaped the clutches of the Syndicate, find themselves standing at the top of the Empire State Building. As the glow of the setting sun bathes the city, they share a victorious kiss. Newly emboldened and as an unstoppable duo, they vow to keep NYC safe from any future threats.',
│   genre='Action Thriller',
│   name='The NYC Chronicles',
│   characters=['Isabella Grant', 'Alex Chen', 'Marcus Kane', 'Detective Ellie Monroe', 'Victor Sinclair'],
│   storyline='Isabella Grant, a fearless investigative journalist, uncovers a massive conspiracy involving a powerful syndicate plotting to control New York City. Teaming up with renegade cop Alex Chen, they must race against time to expose the culprits before the city descends into chaos. Dodging danger at every turn, they fight to protect the city they love from imminent destruction.'
)

from bitca.agent import Agent
from bitca.model.openai import OpenAIChat

agent = Agent(
    model=OpenAIChat(id="gpt-4o"),
    description="Share 15 minute healthy recipes.",
    markdown=True,
)
agent.print_response("Share a breakfast recipe.", stream=True)

from bitca.agent import Agent
from bitca.tools.hackernews import HackerNews

agent = Agent(
    name="Hackernews Team",
    tools=[HackerNews()],
    show_tool_calls=True,
    markdown=True,
)
agent.print_response(
    "Write an engaging summary of the "
    "users with the top 2 stories on hackernews. "
    "Please mention the stories as well.",
)

pip install openai boto3

from bitca.agent import Agent
from bitca.tools.aws_lambda import AWSLambdaTool

# Create an Agent with the AWSLambdaTool
agent = Agent(
    tools=[AWSLambdaTool(region_name="us-east-1")],
    name="AWS Lambda Agent",
    show_tool_calls=True,
)

# Example 1: List all Lambda functions
agent.print_response("List all Lambda functions in our AWS account", markdown=True)

# Example 2: Invoke a specific Lambda function
agent.print_response("Invoke the 'hello-world' Lambda function with an empty payload", markdown=True)

export GIPHY_API_KEY=***

from bitca.agent import Agent
from bitca.model.openai import OpenAIChat
from bitca.tools.giphy import GiphyTools

gif_agent = Agent(
    name="Gif Generator Agent",
    model=OpenAIChat(id="gpt-4o"),
    tools=[GiphyTools()],
    description="You are an AI agent that can generate gifs using Giphy.",
)
gif_agent.print_response("I want a gif to send to a friend for their birthday.")

| Parameter | Type | Default | Description |
|---|---|---|---|
| base_dir | Path | - | Specifies the base directory path for file operations. |
| save_files | bool | True | Determines whether files should be saved during the operation. |
| read_files | bool | True | |

| Function | Description |
|---|---|
| save_file | Saves the contents to a file called file_name and returns the file name if successful. |
| read_file | Reads the contents of the file file_name and returns the contents if successful. |
| list_files | Returns a list of files in the base directory. |
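A natural design point behind base_dir is that file operations should stay inside that directory. The sketch below is a hypothetical, standard-library-only version of save_file, read_file and list_files that rejects paths resolving outside base_dir; SandboxedFiles is an invented name and this is not FileTools' implementation.

```python
import tempfile
from pathlib import Path
from typing import List


class SandboxedFiles:
    """Hypothetical file helper whose operations are confined to base_dir."""

    def __init__(self, base_dir: Path) -> None:
        self.base_dir = base_dir.resolve()

    def _safe_path(self, file_name: str) -> Path:
        path = (self.base_dir / file_name).resolve()
        # Reject names such as "../secret.txt" that resolve outside base_dir
        if self.base_dir not in path.parents:
            raise ValueError(f"{file_name} is outside {self.base_dir}")
        return path

    def save_file(self, contents: str, file_name: str) -> str:
        self._safe_path(file_name).write_text(contents)
        return file_name

    def read_file(self, file_name: str) -> str:
        return self._safe_path(file_name).read_text()

    def list_files(self) -> List[str]:
        return sorted(p.name for p in self.base_dir.iterdir() if p.is_file())


with tempfile.TemporaryDirectory() as tmp:
    files = SandboxedFiles(Path(tmp))
    files.save_file("hello", "answer.txt")
    print(files.read_file("answer.txt"))  # hello
    print(files.list_files())             # ['answer.txt']
```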

The following agent will use the Jina Reader API to summarize the content of https://github.com/phidatahq

cookbook/tools/jinareader_tools.py

| Parameter | Type | Default | Description |
|---|---|---|---|
| api_key | str | - | The API key for authentication purposes, retrieved from the configuration. |
| base_url | str | - | The base URL of the API, retrieved from the configuration. |
| search_url | str | - | |

| Function | Description |
|---|---|
| read_url | Reads the content of a specified URL using Jina Reader API. Parameters include url for the URL to read. Returns the truncated content or an error message if the request fails. |
| search_query | Performs a web search using Jina Reader API based on a specified query. Parameters include query for the search term. Returns the truncated search results or an error message if the request fails. |
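Jina Reader is used by prefixing the target URL with https://r.jina.ai/, and searches go to https://s.jina.ai/ followed by the URL-encoded query. The sketch below builds such requests with urllib and truncates the returned text, mirroring the "truncated content" behavior described above. The function names, the 10000-character default and sending the key as a Bearer token are assumptions for illustration; nothing is sent until urlopen is called.

```python
import urllib.parse
import urllib.request
from typing import Optional

READER_BASE = "https://r.jina.ai/"
SEARCH_BASE = "https://s.jina.ai/"


def _headers(api_key: Optional[str]) -> dict:
    return {"Authorization": f"Bearer {api_key}"} if api_key else {}


def build_read_request(url: str, api_key: Optional[str] = None) -> urllib.request.Request:
    return urllib.request.Request(READER_BASE + url, headers=_headers(api_key))


def build_search_request(query: str, api_key: Optional[str] = None) -> urllib.request.Request:
    # URL-encode the query so spaces and symbols survive
    return urllib.request.Request(SEARCH_BASE + urllib.parse.quote(query), headers=_headers(api_key))


def truncate(text: str, max_content_length: int = 10000) -> str:
    return text[:max_content_length]


req = build_search_request("language models")
print(req.full_url)  # https://s.jina.ai/language%20models
# To fetch: truncate(urllib.request.urlopen(req).read().decode())
```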

Use Nvidia with your Agent:

agent.py

| Parameter | Type | Default | Description |
|---|---|---|---|
| id | str | "nvidia/llama-3.1-nemotron-70b-instruct" | The specific model ID used for generating responses. |
| name | str | "Nvidia" | The name identifier for the Nvidia agent. |
| provider | str | - | |

Nvidia also supports the params of OpenAI.
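Supporting "the params of OpenAI" means the provider exposes an OpenAI-compatible chat completions endpoint under its base_url. As a rough sketch, the request such a client sends can be built with the standard library as below, here using the Nvidia defaults listed in this section. build_chat_request is a hypothetical helper, the /chat/completions path follows the OpenAI convention, and the request is only constructed, not sent.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model_id: str, api_key: str, prompt: str) -> urllib.request.Request:
    body = {"model": model_id, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request(
    "https://integrate.api.nvidia.com/v1",
    "nvidia/llama-3.1-nemotron-70b-instruct",
    "nvapi-***",
    "Share a 2 sentence horror story",
)
print(req.full_url)  # https://integrate.api.nvidia.com/v1/chat/completions
```

Swapping base_url and model_id is all it takes to target another OpenAI-compatible provider, which is why these classes share OpenAI's parameters.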

Use Fireworks with your Agent:

agent.py

| Parameter | Type | Default | Description |
|---|---|---|---|
| id | str | "accounts/fireworks/models/firefunction-v2" | The specific model ID used for generating responses. |
| name | str | "Fireworks: {id}" | The name identifier for the agent. Defaults to "Fireworks: " followed by the model ID. |
| provider | str | "Fireworks" | |

Fireworks also supports the params of OpenAI.

xAI is a platform for providing endpoints for large language models.

Set your XAI_API_KEY environment variable. You can get one from xAI here.

Use xAI with your Agent:

agent.py

For more information, please refer to the as well.

xAI also supports the params of OpenAI.

FalTools enable an Agent to perform media generation tasks.

The following example requires the fal_client library and an API key which can be obtained from Fal.

The following agent will use FAL to generate any video requested by the user.

cookbook/tools/fal_tools.py

BaiduSearch enables an Agent to search the web for information using the Baidu search engine.

The following example requires the baidusearch library. To install BaiduSearch, run the following command:

cookbook/tools/baidusearch_tools.py


JiraTools enable an Agent to perform Jira tasks.

The following example requires the jira library and auth credentials.

The following agent will use the Jira API to search for issues in a project.

cookbook/tools/jira_tools.py

ExaTools enable an Agent to search the web using Exa.

The following example requires the exa-client library and an API key which can be obtained from Exa.

The following agent will search Exa for AAPL news and print the response.

cookbook/tools/exa_tools.py

DuckDuckGo enables an Agent to search the web for information.

The following example requires the duckduckgo-search library. To install DuckDuckGo, run the following command:

cookbook/tools/duckduckgo.py

ArxivTools enable an Agent to search for publications on Arxiv.

The following example requires the arxiv and pypdf libraries.

The following agent will search arXiv for “language models” and print the response.

cookbook/tools/arxiv_tools.py


Sambanova is a platform for providing endpoints for large language models. Note that Sambanova currently does not support function calling.

Set your SAMBANOVA_API_KEY environment variable. Get your key from here.

Use Sambanova with your Agent:

agent.py

Sambanova also supports the params of OpenAI.

ComposioTools enable an Agent to work with tools like Gmail, Salesforce, GitHub, etc.

The following example requires the composio-phidata library.

The following agent will use the GitHub tool from the Composio Toolkit to star a repo.

cookbook/tools/composio_tools.py

The following parameters are used when calling the GitHub star repository action:

Composio Toolkit provides 1000+ functions to connect to different software tools. Open this to view the complete list of functions.

EmailTools enable an Agent to send an email to a user. The Agent can send an email to a user with a specific subject and body.

cookbook/tools/email_tools.py

from bitca.agent import Agent
from bitca.tools.file import FileTools

agent = Agent(tools=[FileTools()], show_tool_calls=True)
agent.print_response("What is the most advanced LLM currently? Save the answer to a file.", markdown=True)

pip install -U jina

from bitca.agent import Agent
from bitca.tools.jina_tools import JinaReaderTools

agent = Agent(tools=[JinaReaderTools()])
agent.print_response("Summarize: https://github.com/bitca")

export NVIDIA_API_KEY=***

from bitca.agent import Agent, RunResponse
from bitca.model.nvidia import Nvidia

agent = Agent(model=Nvidia(), markdown=True)

# Get the response in a variable
# run: RunResponse = agent.run("Share a 2 sentence horror story")
# print(run.content)

# Print the response in the terminal
agent.print_response("Share a 2 sentence horror story")

export FIREWORKS_API_KEY=***

from bitca.agent import Agent, RunResponse
from bitca.model.fireworks import Fireworks

agent = Agent(
    model=Fireworks(id="accounts/fireworks/models/firefunction-v2"),
    markdown=True,
)

# Get the response in a variable
# run: RunResponse = agent.run("Share a 2 sentence horror story.")
# print(run.content)

# Print the response in the terminal
agent.print_response("Share a 2 sentence horror story.")

export DEEPSEEK_API_KEY=***

export XAI_API_KEY=sk-***

pip install openai

export OPENAI_API_KEY=****

export OPENROUTER_API_KEY=***

pip install -U fal_client

export FAL_KEY=***

pip install -U baidusearch

pip install -U jira

export JIRA_SERVER_URL="YOUR_JIRA_SERVER_URL"
export JIRA_USERNAME="YOUR_USERNAME"
export JIRA_API_TOKEN="YOUR_API_TOKEN"

pip install -U exa-client

export EXA_API_KEY=***

pip install -U duckduckgo-search

pip install -U arxiv pypdf

export SAMBANOVA_API_KEY=***

pip install composio-phidata
composio add github  # Log in to GitHub

from bitca.agent import Agent
from bitca.tools.email import EmailTools

receiver_email = "<receiver_email>"
sender_email = "<sender_email>"
sender_name = "<sender_name>"
sender_passkey = "<sender_passkey>"

agent = Agent(
    tools=[
        EmailTools(
            receiver_email=receiver_email,
            sender_email=sender_email,
            sender_name=sender_name,
            sender_passkey=sender_passkey,
        )
    ]
)
agent.print_response("send an email to <receiver_email>")

Allows reading from files during the operation.

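| Parameter | Type | Default | Description |
|---|---|---|---|
| list_files | bool | True | Enables listing of files in the specified directory. |

The URL used for search queries, retrieved from the configuration.

| Parameter | Type | Default | Description |
|---|---|---|---|
| max_content_length | int | - | The maximum length of content allowed, retrieved from the configuration. |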

The provider of the model, combining "Nvidia" with the model ID.

| Parameter | Type | Default | Description |
|---|---|---|---|
| api_key | Optional[str] | - | The API key for authenticating requests to the Nvidia service. Retrieved from the environment variable NVIDIA_API_KEY. |
| base_url | str | "https://integrate.api.nvidia.com/v1" | The base URL for making API requests to the Nvidia service. |

The provider of the model.

| Parameter | Type | Default | Description |
|---|---|---|---|
| api_key | Optional[str] | - | The API key for authenticating requests to the service. Retrieved from the environment variable FIREWORKS_API_KEY. |
| base_url | str | "https://api.fireworks.ai/inference/v1" | The base URL for making API requests to the Fireworks service. |

| Parameter | Type | Default | Description |
|---|---|---|---|
| sender_passkey | str | - | The passkey for the sender’s email. |
| receiver_email | str | - | The email address of the receiver. |
| sender_name | str | - | The name of the sender. |
| sender_email | str | - | The email address of the sender. |

| Function | Description |
|---|---|
| email_user | Emails the user with the given subject and body. Currently works with Gmail. |
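Since email_user currently targets Gmail, sending presumably goes through Gmail's SMTP server. The sketch below shows how a subject and body could be turned into a message and sent with smtplib over SSL on smtp.gmail.com port 465. It assumes sender_passkey is a Gmail app password; build_email and send_email are hypothetical helpers, not EmailTools' own code.

```python
import smtplib
from email.message import EmailMessage


def build_email(sender_name: str, sender_email: str, receiver_email: str, subject: str, body: str) -> EmailMessage:
    msg = EmailMessage()
    msg["From"] = f"{sender_name} <{sender_email}>"
    msg["To"] = receiver_email
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_email(msg: EmailMessage, sender_email: str, sender_passkey: str) -> None:
    # Network call: only run this with real credentials
    with smtplib.SMTP_SSL("smtp.gmail.com", 465) as server:
        server.login(sender_email, sender_passkey)
        server.send_message(msg)


msg = build_email("Bitca Bot", "bot@example.com", "user@example.com", "Hello", "Sent by an agent.")
print(msg["Subject"])  # Hello
```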

Humans RAG built-in

Structured outputs

Reasoning built-in

Monitoring & Debugging built-in

| Parameter | Type | Default | Description |
|---|---|---|---|
| api_key | Optional[str] | - | The API key used for authenticating requests to the DeepSeek service. Retrieved from the environment variable DEEPSEEK_API_KEY. |
| base_url | str | "https://api.deepseek.com" | The base URL for making API requests to the DeepSeek service. |
| id | str | "deepseek-chat" | The specific model ID used for generating responses. |
| name | str | "DeepSeekChat" | The name identifier for the DeepSeek model. |
| provider | str | "DeepSeek" | The provider of the model. |

| Parameter | Type | Default | Description |
|---|---|---|---|
| api_key | Optional[str] | - | The API key for authenticating requests to the xAI service. Retrieved from the environment variable XAI_API_KEY. |
| base_url | str | "https://api.xai.xyz/v1" | The base URL for making API requests to the xAI service. |
| id | str | "grok-beta" | The specific model ID used for generating responses. |
| name | str | "xAI" | The name identifier for the xAI agent. |
| provider | str | "xAI" | The provider of the model, combining "xAI" with the model ID. |

| Parameter | Type | Default | Description |
|---|---|---|---|
| quality | str | "standard" | Image quality (standard or hd) |
| style | str | "vivid" | Image style (vivid or natural) |
| api_key | str | None | The OpenAI API key for authentication |
| model | str | "dall-e-3" | The DALL-E model to use |
| n | int | 1 | Number of images to generate |
| size | str | "1024x1024" | Image size (256x256, 512x512, 1024x1024, 1792x1024, or 1024x1792) |

| Function | Description |
|---|---|
| generate_image | Generates an image based on a text prompt |
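The allowed values above depend on the model. Per OpenAI's image API reference, 256x256 and 512x512 are dall-e-2 sizes, 1792x1024 and 1024x1792 are dall-e-3 sizes, hd quality is dall-e-3 only, and dall-e-3 accepts only n=1 (dall-e-2 allows 1 to 10). The hypothetical helper below checks these combinations before a request is made; it is not part of the tool.

```python
from typing import Dict, Set

# Sizes accepted per model, following OpenAI's image generation API reference
ALLOWED_SIZES: Dict[str, Set[str]] = {
    "dall-e-2": {"256x256", "512x512", "1024x1024"},
    "dall-e-3": {"1024x1024", "1792x1024", "1024x1792"},
}


def validate_image_params(model: str = "dall-e-3", size: str = "1024x1024", quality: str = "standard",
                          style: str = "vivid", n: int = 1) -> None:
    """Raise ValueError for parameter combinations the image API is expected to reject."""
    if model not in ALLOWED_SIZES:
        raise ValueError(f"unknown model: {model}")
    if size not in ALLOWED_SIZES[model]:
        raise ValueError(f"{size} is not supported by {model}")
    if quality not in {"standard", "hd"}:
        raise ValueError("quality must be 'standard' or 'hd'")
    if quality == "hd" and model != "dall-e-3":
        raise ValueError("hd quality is only available with dall-e-3")
    if style not in {"vivid", "natural"}:
        raise ValueError("style must be 'vivid' or 'natural'")
    if model == "dall-e-3" and n != 1:
        raise ValueError("dall-e-3 generates one image per request (n=1)")
    if not 1 <= n <= 10:
        raise ValueError("n must be between 1 and 10")


validate_image_params()  # the defaults from the table pass
```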

| Parameter | Type | Default | Description |
|---|---|---|---|
| api_key | Optional[str] | - | The API key for authenticating requests to the OpenRouter service. Retrieved from the environment variable OPENROUTER_API_KEY. |
| base_url | str | "https://openrouter.ai/api/v1" | The base URL for making API requests to the OpenRouter service. |
| max_tokens | int | 1024 | The maximum number of tokens to generate in the response. |
| id | str | "gpt-4o" | The specific model ID used for generating responses. |
| name | str | "OpenRouter" | The name identifier for the OpenRouter agent. |
| provider | str | - | The provider of the model, combining "OpenRouter" with the model ID. |

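| Parameter | Type | Default | Description |
|---|---|---|---|
| api_key | str | None | API key for authentication purposes. |
| model | str | None | The model to use for the media generation. |

| Function | Description |
|---|---|
| generate_media | Generate either images or videos depending on the user prompt. |
| image_to_image | Transform an input image based on a text prompt. |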

| Parameter | Type | Default | Description |
|---|---|---|---|
| proxy | str | - | Specifies a single proxy address as a string to be used for the HTTP requests. |
| timeout | int | 10 | Sets the timeout for HTTP requests, in seconds. |
| fixed_max_results | int | - | Sets a fixed number of maximum results to return. No default is provided; it must be specified if used. |
| fixed_language | str | - | Sets the fixed language for the results. |
| headers | Any | - | Headers to be used in the search request. |

| Function | Description |
|---|---|
| baidu_search | Use this function to search Baidu for a query. |

| Parameter | Type | Default | Description |
|---|---|---|---|
| token | str | None | The JIRA API token for authentication, retrieved from the environment variable JIRA_TOKEN. Default is None if not set. |
| server_url | str | "" | The URL of the JIRA server, retrieved from the environment variable JIRA_SERVER_URL. Default is an empty string if not set. |
| username | str | None | The JIRA username for authentication, retrieved from the environment variable JIRA_USERNAME. Default is None if not set. |
| password | str | None | The JIRA password for authentication, retrieved from the environment variable JIRA_PASSWORD. Default is None if not set. |

| Function | Description |
|---|---|
| get_issue | Retrieves issue details from JIRA. Parameters include issue_key (the key of the issue to retrieve). Returns a JSON string containing issue details or an error message. |
| create_issue | Creates a new issue in JIRA. Parameters include project_key (the project in which to create the issue), summary (the issue summary), description (the issue description) and issuetype (the type of issue, default “Task”). Returns a JSON string with the new issue’s key and URL or an error message. |
| search_issues | Searches for issues using a JQL query in JIRA. Parameters include jql_str (the JQL query string) and max_results (the maximum number of results to return, default 50). Returns a JSON string containing a list of dictionaries with issue details or an error message. |
| add_comment | Adds a comment to an issue in JIRA. Parameters include issue_key (the key of the issue) and comment (the comment text). Returns a JSON string indicating success or an error message. |

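| Parameter | Type | Default | Description |
|---|---|---|---|
| show_results | bool | False | Controls whether to display search results directly. |

| Parameter | Type | Default | Description |
|---|---|---|---|
| api_key | str | - | API key for authentication purposes. |
| search | bool | False | Determines whether to enable search functionality. |
| search_with_contents | bool | True | Indicates whether to include contents in the search results. |

| Function | Description |
|---|---|
| search_exa | Searches Exa for a query. |
| search_exa_with_contents | Searches Exa for a query and returns the contents from the search results. |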

| Parameter | Type | Default | Description |
|---|---|---|---|
| headers | Any | - | Accepts any type of header values to be sent with HTTP requests. |
| proxy | str | - | Specifies a single proxy address as a string to be used for the HTTP requests. |
| proxies | Any | - | Accepts a dictionary of proxies to be used for HTTP requests. |
| timeout | int | 10 | Sets the timeout for HTTP requests, in seconds. |
| search | bool | True | Enables the use of the duckduckgo_search function to search DuckDuckGo for a query. |
| news | bool | True | Enables the use of the duckduckgo_news function to fetch the latest news via DuckDuckGo. |
| fixed_max_results | int | - | Sets a fixed number of maximum results to return. No default is provided; it must be specified if used. |

| Function | Description |
|---|---|
| duckduckgo_search | Use this function to search DuckDuckGo for a query. |
| duckduckgo_news | Use this function to get the latest news from DuckDuckGo. |

search_arxiv

bool

True

Enables the functionality to search the arXiv database.

read_arxiv_papers

bool

True

Allows reading of arXiv papers directly.

download_dir

Path

-

Specifies the directory path where downloaded files will be saved.

search_arxiv_and_update_knowledge_base

This function searches arXiv for a topic, adds the results to the knowledge base and returns them.

search_arxiv

Searches arXiv for a query.

api_key

Optional[str]

None

The API key for authenticating with Sambanova (defaults to environment variable SAMBANOVA_API_KEY)

base_url

str

"https://api.sambanova.ai/v1"

The base URL for API requests

id

str

"Meta-Llama-3.1-8B-Instruct"

The id of the Sambanova model to use

name

str

"Sambanova"

The name of this chat model instance

provider

str

"Sambanova"

The provider of the model

owner

str

-

The owner of the repository to star.

repo

str

-

The name of the repository to star.

The following agent will use Lumalabs to generate any video requested by the user.

cookbook/tools/lumalabs_tool.py

api_key

str

None

If you want to manually supply the Lumalabs API key.

generate_video

Generate a video from a prompt.

image_to_video

Generate a video from a prompt, a starting image and an ending image.

The following agent will send an email using Resend.

cookbook/tools/resend_tools.py

api_key

str

-

API key for authentication purposes.

from_email

str

-

The email address used as the sender in email communications.

send_email

Send an email using the Resend API.

The following agent will search Google for the query “What's happening in the USA?” and share the results.

cookbook/tools/serpapi_tools.py

api_key

str

-

API key for authentication purposes.

search_youtube

bool

False

Enables the functionality to search for content on YouTube.

search_google

This function searches Google for a query.

search_youtube

Searches YouTube for a query.

pip install -U bitca

python3 -m venv aienv

aienv/scripts/activate

pip install -U bitca openai duckduckgo-search

setx OPENAI_API_KEY sk-***

python web_search.py

from bitca.human import Human

from bitca.model.openai import OpenAIChat

from bitca.tools.yfinance import YFinanceTools

finance_human = Human(

name="Finance Agent",

model=OpenAIChat(id="gpt-4o"),

tools=[YFinanceTools(stock_price=True, analyst_recommendations=True, company_info=True, company_news=True)],

instructions=["Use tables to display data"],

show_tool_calls=True,

markdown=True,

)

finance_human.print_response("Summarize analyst recommendations for NVDA", stream=True)

pip install yfinance

python finance_human.py

from bitca.human import Human

from bitca.model.openai import OpenAIChat

from bitca.tools.duckduckgo import DuckDuckGo

human = Human(

model=OpenAIChat(id="gpt-4o"),

tools=[DuckDuckGo()],

markdown=True,

)

human.print_response(

"Tell me about this image and give me the latest news about it.",

images=["https://upload.wikimedia.org/wikipedia/commons/b/bf/Krakow_-_Kosciol_Mariacki.jpg"],

stream=True,

)

python image_human.py

from bitca.human import Human

from bitca.model.openai import OpenAIChat

from bitca.tools.duckduckgo import DuckDuckGo

from bitca.tools.yfinance import YFinanceTools

web_human = Human(

name="Web Human",

role="Search the web for information",

model=OpenAIChat(id="gpt-4o"),

tools=[DuckDuckGo()],

instructions=["Always include sources"],

show_tool_calls=True,

markdown=True,

)

finance_human = Human(

name="Finance Human",

role="Get financial data",

model=OpenAIChat(id="gpt-4o"),

tools=[YFinanceTools(stock_price=True, analyst_recommendations=True, company_info=True)],

instructions=["Use tables to display data"],

show_tool_calls=True,

markdown=True,

)

human_team = Human(

team=[web_human, finance_human],

instructions=["Always include sources", "Use tables to display data"],

show_tool_calls=True,

markdown=True,

)

human_team.print_response("Summarize analyst recommendations and share the latest news for NVDA", stream=True)

python human_team.py

from bitca.agent import Agent, RunResponse

from bitca.model.deepseek import DeepSeekChat

agent = Agent(model=DeepSeekChat(), markdown=True)

# Get the response in a variable

# run: RunResponse = agent.run("Share a 2 sentence horror story.")

# print(run.content)

# Print the response in the terminal

agent.print_response("Share a 2 sentence horror story.")

from bitca.agent import Agent, RunResponse

from bitca.model.xai import xAI

agent = Agent(

model=xAI(id="grok-beta"),

markdown=True

)

# Get the response in a variable

# run: RunResponse = agent.run("Share a 2 sentence horror story.")

# print(run.content)

# Print the response in the terminal

agent.print_response("Share a 2 sentence horror story.")

from bitca.agent import Agent

from bitca.tools.dalle import Dalle

# Create an Agent with the DALL-E tool

agent = Agent(tools=[Dalle()], name="DALL-E Image Generator")

# Example 1: Generate a basic image with default settings

agent.print_response("Generate an image of a futuristic city with flying cars and tall skyscrapers", markdown=True)

# Example 2: Generate an image with custom settings

custom_dalle = Dalle(model="dall-e-3", size="1792x1024", quality="hd", style="natural")

agent_custom = Agent(

tools=[custom_dalle],

name="Custom DALL-E Generator",

show_tool_calls=True,

)

agent_custom.print_response("Create a panoramic nature scene showing a peaceful mountain lake at sunset", markdown=True)

from bitca.agent import Agent, RunResponse

from bitca.model.openrouter import OpenRouter

agent = Agent(

model=OpenRouter(id="gpt-4o"),

markdown=True

)

# Get the response in a variable

# run: RunResponse = agent.run("Share a 2 sentence horror story.")

# print(run.content)

# Print the response in the terminal

agent.print_response("Share a 2 sentence horror story.")

from bitca.agent import Agent

from bitca.model.openai import OpenAIChat

from bitca.tools.fal_tools import FalTools

fal_agent = Agent(

name="Fal Video Generator Agent",

model=OpenAIChat(id="gpt-4o"),

tools=[FalTools("fal-ai/hunyuan-video")],

description="You are an AI agent that can generate videos using the Fal API.",

instructions=[

"When the user asks you to create a video, use the `generate_media` tool to create the video.",

"Return the URL as raw to the user.",

"Don't convert video URL to markdown or anything else.",

],

markdown=True,

debug_mode=True,

show_tool_calls=True,

)

fal_agent.print_response("Generate video of balloon in the ocean")

from bitca.agent import Agent

from bitca.tools.baidusearch import BaiduSearch

agent = Agent(

tools=[BaiduSearch()],

description="You are a search agent that helps users find the most relevant information using Baidu.",

instructions=[

"Given a topic by the user, respond with the 3 most relevant search results about that topic.",

"Search for 5 results and select the top 3 unique items.",

"Search in both English and Chinese.",

],

show_tool_calls=True,

)

agent.print_response("What are the latest advancements in AI?", markdown=True)

from bitca.agent import Agent

from bitca.tools.jira_tools import JiraTools

agent = Agent(tools=[JiraTools()])

agent.print_response("Find all issues in project PROJ", markdown=True)

from bitca.agent import Agent

from bitca.tools.exa import ExaTools

agent = Agent(tools=[ExaTools(include_domains=["cnbc.com", "reuters.com", "bloomberg.com"])], show_tool_calls=True)

agent.print_response("Search for AAPL news", markdown=True)

from bitca.agent import Agent

from bitca.tools.duckduckgo import DuckDuckGo

agent = Agent(tools=[DuckDuckGo()], show_tool_calls=True)

agent.print_response("What's happening in France?", markdown=True)

from bitca.agent import Agent

from bitca.tools.arxiv_toolkit import ArxivToolkit

agent = Agent(tools=[ArxivToolkit()], show_tool_calls=True)

agent.print_response("Search arxiv for 'language models'", markdown=True)

from bitca.agent import Agent, RunResponse

from bitca.model.sambanova import Sambanova

agent = Agent(model=Sambanova(), markdown=True)

# Get the response in a variable

# run: RunResponse = agent.run("Share a 2 sentence horror story.")

# print(run.content)

# Print the response in the terminal

agent.print_response("Share a 2 sentence horror story.")

from phi.agent import Agent

from composio_phidata import Action, ComposioToolSet

toolset = ComposioToolSet()

composio_tools = toolset.get_tools(

actions=[Action.GITHUB_STAR_A_REPOSITORY_FOR_THE_AUTHENTICATED_USER]

)

agent = Agent(tools=composio_tools, show_tool_calls=True)

agent.print_response("Can you star phidatahq/phidata repo?")

export LUMAAI_API_KEY=***

pip install -U lumaai

from bitca.agent import Agent

from bitca.model.openai import OpenAIChat

from bitca.tools.lumalab import LumaLabTools

luma_agent = Agent(

name="Luma Video Agent",

model=OpenAIChat(id="gpt-4o"),

tools=[LumaLabTools()], # Using the LumaLab tool we created

markdown=True,

debug_mode=True,

show_tool_calls=True,

instructions=[

"You are an agent designed to generate videos using the Luma AI API.",

"You can generate videos in two ways:",

"1. Text-to-Video Generation:",

"2. Image-to-Video Generation:",

"Choose the appropriate function based on whether the user provides image URLs or just a text prompt.",

"The video will be displayed in the UI automatically below your response, so you don't need to show the video URL in your response.",

],

system_message=(

"Use generate_video for text-to-video requests and image_to_video for image-based "

"generation. Don't modify default parameters unless specifically requested. "

"Always provide clear feedback about the video generation status."

),

)

luma_agent.run("Generate a video of a car in a sky")

pip install -U resend

export RESEND_API_KEY=***

from bitca.agent import Agent

from bitca.tools.resend_tools import ResendTools

from_email = "<enter_from_email>"

to_email = "<enter_to_email>"

agent = Agent(tools=[ResendTools(from_email=from_email)], show_tool_calls=True)

agent.print_response(f"Send an email to {to_email} greeting them with hello world")

pip install -U google-search-results

export SERPAPI_API_KEY=***

from bitca.agent import Agent

from bitca.tools.serpapi_tools import SerpApiTools

agent = Agent(tools=[SerpApiTools()])

agent.print_response("What's happening in the USA?", markdown=True)

add

bool

True

Enables the functionality to perform addition.

subtract

bool

True

Enables the functionality to perform subtraction.

multiply

bool

True

add

Adds two numbers and returns the result.

subtract

Subtracts the second number from the first and returns the result.

multiply

Multiplies two numbers and returns the result.

divide

Divides the first number by the second and returns the result. Handles division by zero.

exponentiate

Raises the first number to the power of the second number and returns the result.

factorial

Calculates the factorial of a number and returns the result. Handles negative numbers.
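The calculator functions above can be sketched in plain Python. This sketch assumes each function returns a JSON string containing the operation and either a result or an error, in line with the other toolkits on this page. The exact output keys are an assumption, not the toolkit's documented format.

```python
import json
import math


def add(a: float, b: float) -> str:
    return json.dumps({"operation": "addition", "result": a + b})


def subtract(a: float, b: float) -> str:
    return json.dumps({"operation": "subtraction", "result": a - b})


def multiply(a: float, b: float) -> str:
    return json.dumps({"operation": "multiplication", "result": a * b})


def divide(a: float, b: float) -> str:
    # Report division by zero as an error instead of raising ZeroDivisionError.
    if b == 0:
        return json.dumps({"operation": "division", "error": "Division by zero is undefined"})
    return json.dumps({"operation": "division", "result": a / b})


def exponentiate(a: float, b: float) -> str:
    return json.dumps({"operation": "exponentiation", "result": a ** b})


def factorial(n: int) -> str:
    # Factorial is undefined for negative integers, so return an error instead.
    if n < 0:
        return json.dumps({"operation": "factorial", "error": "Factorial of a negative number is undefined"})
    return json.dumps({"operation": "factorial", "result": math.factorial(n)})


# "10*5 then to the power of 2", step by step:
step1 = json.loads(multiply(10, 5))["result"]         # 50
step2 = json.loads(exponentiate(step1, 2))["result"]  # 2500
print(step1, step2)
```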

dags_dir

Path or str

Path.cwd()

Directory for DAG files

save_dag

bool

True

Whether to register the save_dag_file function

read_dag

bool

True

Whether to register the read_dag_file function

save_dag_file

Saves python code for an Airflow DAG to a file

read_dag_file

Reads an Airflow DAG file and returns the contents
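The `save_dag` and `read_dag` flags decide which functions the toolkit exposes to the Agent, and `dags_dir` decides where the files live. Below is a minimal sketch of that flag-based registration pattern and the two file operations. It uses a hypothetical `MiniAirflowToolkit` class, not the real `AirflowToolkit` internals.

```python
import tempfile
from pathlib import Path
from typing import Callable, Dict, Optional, Union


class MiniAirflowToolkit:
    """Hypothetical stand-in showing flag-based function registration."""

    def __init__(
        self,
        dags_dir: Optional[Union[Path, str]] = None,
        save_dag: bool = True,
        read_dag: bool = True,
    ):
        # Mirror the documented default: fall back to the current working directory.
        self.dags_dir = Path(dags_dir) if dags_dir is not None else Path.cwd()
        self.functions: Dict[str, Callable[..., str]] = {}
        # Only the functions whose flags are enabled are exposed to the Agent.
        if save_dag:
            self.functions["save_dag_file"] = self.save_dag_file
        if read_dag:
            self.functions["read_dag_file"] = self.read_dag_file

    def save_dag_file(self, contents: str, dag_file: str) -> str:
        path = self.dags_dir / dag_file
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(contents)
        return f"Saved DAG file to {path}"

    def read_dag_file(self, dag_file: str) -> str:
        path = self.dags_dir / dag_file
        if not path.exists():
            return f"Error: {path} does not exist"
        return path.read_text()


with tempfile.TemporaryDirectory() as tmp:
    toolkit = MiniAirflowToolkit(dags_dir=tmp, read_dag=False)
    print(sorted(toolkit.functions))  # ['save_dag_file']
```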


Use Gemini with your Agent:

agent.py

id

str

"gemini-1.5-flash"

The specific Gemini model ID to use.

name

str

"Gemini"

The name of this Gemini model instance.

provider

str

"Google"

The following agent will use Cal.com to list all events in your Cal.com account for tomorrow.

cookbook/tools/calcom_tools.py

api_key

str

None

Cal.com API key

event_type_id

int

None

Event type ID for scheduling

user_timezone

str

None

get_available_slots

Gets available time slots for a given date range

create_booking

Creates a new booking with provided details

get_upcoming_bookings

Gets list of upcoming bookings

get_booking_details

Gets details for a specific booking

reschedule_booking

Reschedules an existing booking

cancel_booking

Cancels an existing booking

The following agent will scrape the content from https://finance.yahoo.com/ and return a summary of the content:

cookbook/tools/firecrawl_tools.py

api_key

str

None

Optional API key for authentication purposes.

formats

List[str]

None

Optional list of formats to be used for the operation.

limit

int

10

scrape_website

Scrapes a website using Firecrawl. Parameters include url to specify the URL to scrape. The function supports optional formats if specified. Returns the results of the scraping in JSON format.

crawl_website

Crawls a website using Firecrawl. Parameters include url to specify the URL to crawl, and an optional limit to define the maximum number of pages to crawl. The function supports optional formats and returns the crawling results in JSON format.

The following agent will use ModelsLabs to generate a video based on a text prompt.

cookbook/tools/models_labs_tools.py

api_key

str

None

The ModelsLab API key for authentication

url

str

"https://modelslab.com/api/v6/video/text2video"

The API endpoint URL

fetch_url

str

"https://modelslab.com/api/v6/video/fetch"

generate_media

Generates a video or gif based on a text prompt

The following agent will search Google for the latest news about “Mistral AI”:

cookbook/tools/googlesearch_tools.py

fixed_max_results

int

None

Optional fixed maximum number of results to return.

fixed_language

str

None

Optional fixed language for the requests.

headers

Any

None

google_search

Searches Google for a specified query. Parameters include query for the search term, max_results for the maximum number of results (default is 5), and language for the language of the search results (default is “en”). Returns the search results as a JSON formatted string.
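`fixed_max_results` and `fixed_language` are configured on the toolkit, while `google_search` itself takes `max_results` (default 5) and `language` (default "en"). Below is a minimal sketch of one plausible way to resolve the two. It assumes that a fixed value, when set, always overrides the per-call argument. The helper name is hypothetical.

```python
from typing import Optional, Tuple


def resolve_search_options(
    max_results: int = 5,
    language: str = "en",
    fixed_max_results: Optional[int] = None,
    fixed_language: Optional[str] = None,
) -> Tuple[int, str]:
    """Return the (max_results, language) pair a search call would actually use."""
    # A fixed value configured on the toolkit wins over whatever the model asks for.
    effective_max = fixed_max_results if fixed_max_results is not None else max_results
    effective_lang = fixed_language if fixed_language is not None else language
    return effective_max, effective_lang


print(resolve_search_options())                                      # (5, 'en')
print(resolve_search_options(max_results=10, fixed_max_results=3))   # (3, 'en')
print(resolve_search_options(language="fr"))                         # (5, 'fr')
```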

The following agent will use Apify to crawl the webpage: https://docs.projectbit.ca/ and summarize it.

cookbook/tools/apify_tools.py

api_key

str

-

API key for authentication purposes.

website_content_crawler

bool

True

Enables the functionality to crawl a website using the website-content-crawler actor.

web_scraper

bool

False

website_content_crawler

Crawls a website using Apify’s website-content-crawler actor.

web_scrapper

Scrapes a website using Apify’s web-scraper actor.


Use best-in-class GPT models through Azure’s OpenAI API.

Set your environment variables.


export AZURE_OPENAI_API_KEY=***

export AZURE_OPENAI_ENDPOINT=***

export AZURE_OPENAI_MODEL_NAME=***

export AZURE_OPENAI_DEPLOYMENT=***

# Optional:

# export AZURE_OPENAI_API_VERSION=***

Use AzureOpenAIChat with your Agent:

agent.py

Azure also supports the params of .
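The environment variables above supply the Azure connection settings. Below is a minimal sketch of how such settings might be resolved: explicit arguments take precedence, the `AZURE_OPENAI_*` variables are the fallback, and `api_version` defaults to "2024-02-01", the default listed for `AzureOpenAIChat`. The helper itself and the mapping of each variable to a parameter are assumptions.

```python
import os
from typing import Dict, Mapping, Optional


def resolve_azure_settings(
    api_key: Optional[str] = None,
    azure_endpoint: Optional[str] = None,
    azure_deployment: Optional[str] = None,
    model_id: Optional[str] = None,
    api_version: Optional[str] = None,
    env: Optional[Mapping[str, str]] = None,
) -> Dict[str, Optional[str]]:
    """Prefer explicit arguments; otherwise fall back to the AZURE_OPENAI_* variables."""
    env = os.environ if env is None else env
    return {
        "api_key": api_key or env.get("AZURE_OPENAI_API_KEY"),
        "azure_endpoint": azure_endpoint or env.get("AZURE_OPENAI_ENDPOINT"),
        "azure_deployment": azure_deployment or env.get("AZURE_OPENAI_DEPLOYMENT"),
        "id": model_id or env.get("AZURE_OPENAI_MODEL_NAME"),
        # AZURE_OPENAI_API_VERSION is optional; use the listed default when it is unset.
        "api_version": api_version or env.get("AZURE_OPENAI_API_VERSION") or "2024-02-01",
    }


fake_env = {"AZURE_OPENAI_API_KEY": "key-123", "AZURE_OPENAI_ENDPOINT": "https://example.openai.azure.com"}
settings = resolve_azure_settings(env=fake_env)
print(settings["api_version"])  # 2024-02-01
```

Passing `env` explicitly keeps the sketch testable without touching the real process environment.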

Agents use storage to persist sessions by storing them in a database.

Agents come with built-in memory, but it only lasts while the session is active. To continue conversations across sessions, we store agent sessions in a database like PostgreSQL.

The general syntax for adding storage to an Agent looks like:

Example

Run Postgres

Install Docker Desktop and run Postgres on port 5532 using:

Create an Agent with Storage

Create a file agent_with_storage.py with the following contents:

Run the agent

Install libraries


Run the agent

Now the agent continues across sessions. Ask a question:

Then send the message bye to exit, start the app again, and ask:

Start a new run

Run the agent_with_storage.py file with the --new flag to start a new run.
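The idea behind storage is that each session's messages are written to a table keyed by session ID, so a later run can load them back and continue the conversation. Below is a minimal sketch of that idea. It uses Python's built-in `sqlite3` instead of PostgreSQL, and the `SessionStore` class and its schema are hypothetical; they do not mirror `PgAgentStorage`.

```python
import json
import sqlite3
from typing import Dict, List


class SessionStore:
    """Hypothetical session store: one row per session, messages kept as JSON."""

    def __init__(self, db_path: str = ":memory:", table_name: str = "agent_sessions"):
        self.conn = sqlite3.connect(db_path)
        self.table = table_name  # trusted, fixed table name
        self.conn.execute(
            f"CREATE TABLE IF NOT EXISTS {self.table} "
            "(session_id TEXT PRIMARY KEY, messages TEXT NOT NULL)"
        )

    def load(self, session_id: str) -> List[Dict[str, str]]:
        row = self.conn.execute(
            f"SELECT messages FROM {self.table} WHERE session_id = ?", (session_id,)
        ).fetchone()
        return json.loads(row[0]) if row else []

    def append(self, session_id: str, role: str, content: str) -> None:
        messages = self.load(session_id)
        messages.append({"role": role, "content": content})
        # Upsert so the same session can be extended across runs.
        self.conn.execute(
            f"INSERT INTO {self.table} (session_id, messages) VALUES (?, ?) "
            "ON CONFLICT(session_id) DO UPDATE SET messages = excluded.messages",
            (session_id, json.dumps(messages)),
        )
        self.conn.commit()


store = SessionStore()
store.append("session-1", "user", "How many people live in Canada?")
store.append("session-1", "user", "What is their national anthem called?")
print(len(store.load("session-1")))  # 2
```

With a file path instead of `":memory:"`, the messages survive a process restart. That is the property the `--new` flag lets you opt out of.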

Claude is a family of foundational AI models by Anthropic that can be used in a variety of applications.

Set your ANTHROPIC_API_KEY environment variable. You can get one from Anthropic here.


Use Claude with your Agent:

agent.py

Vertex AI is Google Cloud’s platform for building, training, and deploying machine learning models.

Use Gemini with your Agent:

agent.py

Together is a platform that provides endpoints for large language models.

Set your TOGETHER_API_KEY environment variable. Get your key from Together here.


Use Together with your Agent:

agent.py

Together also supports the params of .

GithubTools enables an Agent to access GitHub repositories and perform tasks such as listing open pull requests, issues, and more.

The following example requires the PyGithub library and a GitHub access token, which can be obtained from here.

The following agent will use GithubTools to work with a GitHub repository:

cookbook/tools/github_tools.py

Newspaper4k enables an Agent to read news articles using the Newspaper4k library.

The following example requires the newspaper4k and lxml_html_clean libraries.

The following agent will summarize the article: .

cookbook/tools/newspaper4k_tools.py

OpenBBTools enables an Agent to provide information about stocks and companies.

cookbook/tools/openbb_tools.py

ReplicateTools enables an Agent to generate media using the Replicate platform.

The following example requires the replicate library. To install the Replicate client, run the following command:

The following agent will use Replicate to generate images or videos requested by the user.

cookbook/tools/replicate_tool.py

SpiderTools enables an Agent to use Spider, an open source web scraper and crawler that returns LLM-ready data. To start using Spider, you need an API key from the Spider dashboard.

The following example requires the spider-client library.

The following agent will run a search query to get the latest news in the USA and scrape the first search result. The agent will return the scraped data in markdown format.

cookbook/tools/spider_tools.py

ShellTools enables an Agent to interact with the shell to run commands.

The following agent will run a shell command and show contents of the current directory.

Mention your OS to the agent to make sure it runs the correct command.

cookbook/tools/shell_tools.py

from bitca.agent import Agent

from bitca.tools.shell import ShellTools

agent = Agent(tools=[ShellTools()], show_tool_calls=True)

agent.print_response("Show me the contents of the current directory", markdown=True)

PubmedTools enables an Agent to search PubMed for articles.

The following agent will search Pubmed for articles related to “ulcerative colitis”.

cookbook/tools/pubmed.py

from bitca.agent import Agent

from bitca.tools.pubmed import PubmedTools

agent = Agent(tools=[PubmedTools()], show_tool_calls=True)

agent.print_response("Tell me about ulcerative colitis.")

The following agent will use the sleep tool to pause execution for a given number of seconds.

cookbook/tools/sleep_tools.py

from bitca.agent import Agent

from bitca.tools.sleep import Sleep

# Create an Agent with the Sleep tool

agent = Agent(tools=[Sleep()], name="Sleep Agent")

# Example 1: Sleep for 2 seconds

agent.print_response("Sleep for 2 seconds")

# Example 2: Sleep for a longer duration

agent.print_response("Sleep for 5 seconds")

WebsiteTools enables an Agent to parse a website and add its contents to the knowledge base.

The following example requires the beautifulsoup4 library.

The following agent will read the contents of a website and add it to the knowledge base.

cookbook/tools/website_tools.py


Agents use tools to take actions and interact with external systems.

Tools are functions that an Agent can run to achieve tasks. For example: searching the web, running SQL, sending an email or calling APIs. You can use any Python function as a tool or use a pre-built toolkit. The general syntax is:

Bitca provides many pre-built toolkits that you can add to your Agents. For example, let’s use the DuckDuckGo toolkit to search the web.

from bitca.agent import Agent

from bitca.tools.calculator import Calculator

agent = Agent(

tools=[

Calculator(

add=True,

subtract=True,

multiply=True,

divide=True,

exponentiate=True,

factorial=True,

is_prime=True,

square_root=True,

)

],

show_tool_calls=True,

markdown=True,

)

agent.print_response("What is 10*5 then to the power of 2, do it step by step")

from bitca.agent import Agent

from bitca.tools.airflow import AirflowToolkit

agent = Agent(

tools=[AirflowToolkit(dags_dir="dags", save_dag=True, read_dag=True)], show_tool_calls=True, markdown=True

)

dag_content = """
from airflow import DAG
from airflow.operators.python import PythonOperator
from datetime import datetime, timedelta

default_args = {
    'owner': 'airflow',
    'depends_on_past': False,
    'start_date': datetime(2024, 1, 1),
    'email_on_failure': False,
    'email_on_retry': False,
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
}

# Using 'schedule' instead of deprecated 'schedule_interval'
with DAG(
    'example_dag',
    default_args=default_args,
    description='A simple example DAG',
    schedule='@daily',  # Changed from schedule_interval
    catchup=False
) as dag:

    def print_hello():
        print("Hello from Airflow!")
        return "Hello task completed"

    task = PythonOperator(
        task_id='hello_task',
        python_callable=print_hello,
        dag=dag,
    )
"""

agent.run(f"Save this DAG file as 'example_dag.py': {dag_content}")

agent.print_response("Read the contents of 'example_dag.py'")

export GOOGLE_API_KEY=***

from bitca.agent import Agent, RunResponse

from bitca.model.google import Gemini

agent = Agent(

model=Gemini(id="gemini-1.5-flash"),

markdown=True,

)

# Get the response in a variable

# run: RunResponse = agent.run("Share a 2 sentence horror story.")

# print(run.content)

# Print the response in the terminal

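agent.print_response("Share a 2 sentence horror story.")

pip install requests pytz

export CALCOM_API_KEY="your_api_key"

export CALCOM_EVENT_TYPE_ID="your_event_type_id"

from datetime import datetime

from bitca.agent import Agent

from bitca.model.openai import OpenAIChat

# Module path assumed by analogy with the other bitca.tools imports on this page.
from bitca.tools.calcom import CalCom

agent = Agent(

name="Calendar Assistant",

instructions=[

f"You're a scheduling assistant. Today is {datetime.now()}.",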

"You can help users by:",

"- Finding available time slots",

"- Creating new bookings",

"- Managing existing bookings (view, reschedule, cancel) ",

"- Getting booking details",

"- IMPORTANT: In case of rescheduling or cancelling a booking, call the get_upcoming_bookings function to get the booking uid. Check available slots before making a booking for a given time.",

"Always confirm important details before making bookings or changes.",

],

model=OpenAIChat(id="gpt-4"),

tools=[CalCom(user_timezone="America/New_York")],

show_tool_calls=True,

markdown=True,

)

agent.print_response("What are my bookings for tomorrow?")

pip install -U firecrawl-py

export FIRECRAWL_API_KEY=***

from bitca.agent import Agent

from bitca.tools.firecrawl import FirecrawlTools

agent = Agent(tools=[FirecrawlTools(scrape=False, crawl=True)], show_tool_calls=True, markdown=True)

agent.print_response("Summarize this https://finance.yahoo.com/")

pip install requests

export MODELS_LAB_API_KEY=****

from bitca.agent import Agent

from bitca.tools.models_labs import ModelsLabs

# Create an Agent with the ModelsLabs tool

agent = Agent(tools=[ModelsLabs()], name="ModelsLabs Agent")

agent.print_response("Generate a video of a beautiful sunset over the ocean", markdown=True)

pip install -U googlesearch-python pycountry

from bitca.agent import Agent

from bitca.tools.googlesearch import GoogleSearch

agent = Agent(

tools=[GoogleSearch()],

description="You are a news agent that helps users find the latest news.",

instructions=[

"Given a topic by the user, respond with 4 latest news items about that topic.",

"Search for 10 news items and select the top 4 unique items.",

"Search in English and in French.",

],

show_tool_calls=True,

debug_mode=True,

)

agent.print_response("Mistral AI", markdown=True)

pip install -U apify-client

export MY_APIFY_TOKEN=***

from bitca.agent import Agent

from bitca.tools.apify import ApifyTools

agent = Agent(tools=[ApifyTools()], show_tool_calls=True)

agent.print_response("Tell me about https://docs.bitca.com/introduction", markdown=True)

from bitca.agent import Agent

from bitca.model.openai import OpenAIChat

from bitca.tools.duckduckgo import DuckDuckGo

from bitca.storage.agent.postgres import PgAgentStorage

agent = Agent(

model=OpenAIChat(id="gpt-4o"),

storage=PgAgentStorage(table_name="agent_sessions", db_url="postgresql+psycopg://ai:ai@localhost:5532/ai"),

tools=[DuckDuckGo()],

show_tool_calls=True,

add_history_to_messages=True,

)

agent.print_response("How many people live in Canada?")

agent.print_response("What is their national anthem called?")

agent.print_response("Which country are we speaking about?")

docker run -d \

-e POSTGRES_DB=ai \

-e POSTGRES_USER=ai \

-e POSTGRES_PASSWORD=ai \

-e PGDATA=/var/lib/postgresql/data/pgdata \

-v pgvolume:/var/lib/postgresql/data \

-p 5532:5432 \

--name pgvector \

bitca/pgvector:16

export ANTHROPIC_API_KEY=***

export AWS_ACCESS_KEY_ID=***

export AWS_SECRET_ACCESS_KEY=***

export AWS_DEFAULT_REGION=***

export TOGETHER_API_KEY=***

pip install -U PyGithub

export GITHUB_ACCESS_TOKEN=***

export LINEAR_API_KEY="LINEAR_API_KEY"

from bitca.agent import Agent, RunResponse

from bitca.model.ollama import Ollama

agent = Agent(

model=Ollama(id="llama3.1"),

markdown=True

)

# Get the response in a variable

# run: RunResponse = agent.run("Share a 2 sentence horror story.")

# print(run.content)

# Print the response in the terminal

agent.print_response("Share a 2 sentence horror story.")

pip install -U newspaper4k lxml_html_clean

from bitca.agent import Agent

from bitca.tools.openbb_tools import OpenBBTools

agent = Agent(tools=[OpenBBTools()], debug_mode=True, show_tool_calls=True)

# Example usage showing stock analysis

agent.print_response(

"Get me the current stock price and key information for Apple (AAPL)"

)

# Example showing market analysis

agent.print_response(

"What are the top gainers in the market today?"

)

# Example showing economic indicators

agent.print_response(

"Show me the latest GDP growth rate and inflation numbers for the US"

)

export REPLICATE_API_TOKEN=***

pip install -U replicate

pip install -U spider-client

pip install -U beautifulsoup4

Enables the functionality to perform multiplication.

divide

bool

True

Enables the functionality to perform division.

exponentiate

bool

False

Enables the functionality to perform exponentiation.

factorial

bool

False

Enables the functionality to calculate the factorial of a number.

is_prime

bool

False

Enables the functionality to check if a number is prime.

square_root

bool

False

Enables the functionality to calculate the square root of a number.

is_prime

Checks if a number is prime and returns the result.

square_root

Calculates the square root of a number and returns the result. Handles negative numbers.

name

str

"AirflowTools"

The name of the tool

The provider of the model.

function_declarations

Optional[List[FunctionDeclaration]]

None

List of function declarations for the model.

generation_config

Optional[Any]

None

Configuration for text generation.

safety_settings

Optional[Any]

None

Safety settings for the model.

generative_model_kwargs

Optional[Dict[str, Any]]

None

Additional keyword arguments for the generative model.

api_key

Optional[str]

None

API key for authentication.

client_params

Optional[Dict[str, Any]]

None

Additional parameters for the client.

client

Optional[GenerativeModel]

None

The underlying generative model client.
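`api_key`, `client_params`, and `client` together determine how the model connects. Below is a minimal sketch of a common precedence pattern. It assumes a pre-configured `client` is used as-is, and otherwise one is built lazily from `api_key` plus `client_params` and cached. `FakeClient` and `ModelConfig` are hypothetical stand-ins, not the Gemini SDK.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class FakeClient:
    """Hypothetical stand-in for an SDK client object."""
    api_key: Optional[str]
    options: Dict[str, Any]


@dataclass
class ModelConfig:
    api_key: Optional[str] = None
    client_params: Optional[Dict[str, Any]] = None
    client: Optional[FakeClient] = None

    def get_client(self) -> FakeClient:
        # A pre-configured client is used as-is.
        if self.client is not None:
            return self.client
        # Otherwise build one from api_key plus client_params and cache it for reuse.
        self.client = FakeClient(api_key=self.api_key, options=dict(self.client_params or {}))
        return self.client


config = ModelConfig(api_key="abc", client_params={"timeout": 30})
client = config.get_client()
print(client.options)  # {'timeout': 30}
```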

User’s timezone (e.g. “America/New_York”)

get_available_slots

bool

True

Enable getting available time slots

create_booking

bool

True

Enable creating new bookings

get_upcoming_bookings

bool

True

Enable getting upcoming bookings

reschedule_booking

bool

True

Enable rescheduling bookings

cancel_booking

bool

True

Enable canceling bookings

Maximum number of items to retrieve. The default value is 10.

scrape

bool

True

Enables the scraping functionality. Default is True.

crawl

bool

False

Enables the crawling functionality. Default is False.

The URL to fetch the video status from

wait_for_completion

bool

False

Whether to wait for the video to be ready

add_to_eta

int

15

Time to add to the ETA to account for the time it takes to fetch the video

max_wait_time

int

60

Maximum time to wait for the video to be ready

file_type

str

"mp4"

The type of file to generate
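`wait_for_completion`, `add_to_eta`, and `max_wait_time` describe a polling loop. Below is a minimal sketch of how they might combine. It assumes the API reports an ETA in seconds and that the tool first waits for that ETA plus `add_to_eta`. After that, it polls the status endpoint until the video is ready, never waiting longer than `max_wait_time` in total. `fetch_status`, `poll_interval`, and the injectable `sleep` are hypothetical additions so the example runs offline.

```python
import time
from typing import Callable, Optional


def wait_for_video(
    eta: int,
    fetch_status: Callable[[], Optional[str]],
    add_to_eta: int = 15,
    max_wait_time: int = 60,
    poll_interval: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Return the video URL once ready, or None if max_wait_time is exhausted."""
    # First wait: the reported ETA plus a buffer, capped by the overall limit.
    waited = min(eta + add_to_eta, max_wait_time)
    sleep(waited)
    while True:
        url = fetch_status()  # hypothetical call: returns a URL once the video is ready
        if url is not None:
            return url
        if waited >= max_wait_time:
            return None
        step = min(poll_interval, max_wait_time - waited)
        sleep(step)
        waited += step


responses = iter([None, None, "https://example.com/video.mp4"])
slept = []
url = wait_for_video(eta=10, fetch_status=lambda: next(responses), sleep=slept.append)
print(url, slept)  # https://example.com/video.mp4 [25, 5, 5]
```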

Optional headers to include in the requests.

proxy

str

None

Optional proxy to be used for the requests.

timeout

int

None

Optional timeout for the requests, in seconds.

Enables the functionality to crawl a website using web_scraper actor.

read_article

bool

True

Enables the functionality to read the full content of an article.

include_summary

bool

False

Specifies whether to include a summary of the article along with the full content.

article_length

int

-

The maximum length of the article or its summary to be processed or returned.

get_stock_price

This function gets the current stock price for a stock symbol or list of symbols.

search_company_symbol

This function searches for the stock symbol of a company.

get_price_targets

This function gets the price targets for a stock symbol or list of symbols.

get_company_news

This function gets the latest news for a stock symbol or list of symbols.

get_company_profile

This function gets the company profile for a stock symbol or list of symbols.

run_shell_command

Runs a shell command and returns the output or error.
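`run_shell_command` runs a command and returns either its output or its error. Below is a minimal sketch using `subprocess.run`. It assumes the command arrives as a list of arguments, so no shell string parsing is involved. It also assumes that a non-zero exit status means the error text should be returned. This is illustrative, not the `ShellTools` source.

```python
import subprocess
import sys
from typing import List


def run_shell_command(args: List[str], timeout: float = 30.0) -> str:
    """Run a command and return stdout on success, or the error text on failure."""
    try:
        result = subprocess.run(args, capture_output=True, text=True, timeout=timeout)
    except (OSError, subprocess.TimeoutExpired) as exc:
        # Missing executables and timeouts are reported instead of raised.
        return f"Error: {exc}"
    if result.returncode != 0:
        return f"Error: {result.stderr.strip()}"
    return result.stdout


# Portable example: call the current Python interpreter instead of an OS-specific "ls" or "dir".
print(run_shell_command([sys.executable, "-c", "print('hello')"]))
```

The OS-specific commands are the reason the page recommends mentioning your OS to the agent. `ls` works on macOS and Linux, while Windows uses `dir`.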

email

str

Specifies the email address to use.

max_results

int

None

Optional parameter to specify the maximum number of results to return.

search_pubmed

Searches PubMed for articles based on a specified query. Parameters include query for the search term and max_results for the maximum number of results to return (default is 10). Returns a JSON string containing the search results, including publication date, title, and summary.
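`search_pubmed` takes a query and `max_results` (default 10), and the toolkit itself takes an `email`. Below is a minimal sketch of how such a search could be addressed to NCBI's E-utilities `esearch` endpoint, which accepts `db`, `term`, `retmax`, and `email` parameters. Whether `PubmedTools` builds its requests this way is an assumption. The sketch only builds the URL and does not send a request.

```python
from urllib.parse import parse_qs, urlencode, urlparse

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_esearch_url(query: str, email: str, max_results: int = 10) -> str:
    params = {
        "db": "pubmed",
        "term": query,
        "retmax": max_results,  # maximum number of article IDs to return
        "email": email,         # NCBI asks callers to identify themselves
        "retmode": "json",
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


url = build_esearch_url("ulcerative colitis", email="you@example.com")
print(url)
print(parse_qs(urlparse(url).query)["term"])  # ['ulcerative colitis']
```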

name

str

"sleep"

The name of the tool

sleep

Pauses execution for a specified number of seconds

format

Optional[str]

None

The format of the response.

options

Optional[Any]

None

Additional options to pass to the model.

keep_alive

Optional[Union[float, str]]

None

The keep alive time for the model.

request_params

Optional[Dict[str, Any]]

None

Additional parameters to pass to the request.

host

Optional[str]

None

The host to connect to.

timeout

Optional[Any]

None

The timeout for the connection.

client_params

Optional[Dict[str, Any]]

None

Additional parameters to pass to the client.

client

Optional[OllamaClient]

None

A pre-configured instance of the Ollama client.

async_client

Optional[AsyncOllamaClient]

None

A pre-configured instance of the asynchronous Ollama client.

id

str

"llama3.2"

The ID of the model to use.

name

str

"Ollama"

The name of the model.

provider

str

"Ollama llama3.2"

The provider of the model.
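`format`, `options`, `keep_alive`, and `request_params` all end up in the request sent to Ollama. Below is a minimal sketch of one way to assemble them. It assumes unset (`None`) values are omitted so server defaults apply, and that `request_params` is merged last so it can override the rest. The helper is hypothetical.

```python
from typing import Any, Dict, Optional, Union


def build_request_kwargs(
    format: Optional[str] = None,  # named to match the documented parameter
    options: Optional[Any] = None,
    keep_alive: Optional[Union[float, str]] = None,
    request_params: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    candidates = {"format": format, "options": options, "keep_alive": keep_alive}
    # Drop parameters the user did not set so the server defaults apply.
    kwargs = {k: v for k, v in candidates.items() if v is not None}
    # Extra request_params are merged last and win on conflicts.
    if request_params:
        kwargs.update(request_params)
    return kwargs


print(build_request_kwargs(format="json", options={"temperature": 0.2}))
# {'format': 'json', 'options': {'temperature': 0.2}}
```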

| Parameter | Type | Default | Description |
| --- | --- | --- | --- |
| api_key | Optional[str] | - | The API key for authenticating requests to the Azure OpenAI service. |
| api_version | str | "2024-02-01" | The version of the Azure OpenAI API to use. |
| azure_endpoint | Optional[str] | - | The endpoint URL for the Azure OpenAI service. |
| azure_deployment | Optional[str] | - | The deployment name or ID in Azure. |
| base_url | Optional[str] | - | The base URL for making API requests to the Azure OpenAI service. |
| azure_ad_token | Optional[str] | - | The Azure Active Directory token for authenticating requests. |
| azure_ad_token_provider | Optional[Any] | - | The provider for obtaining Azure Active Directory tokens. |
| organization | Optional[str] | - | The organization associated with the API requests. |
| openai_client | Optional[AzureOpenAIClient] | - | An instance of AzureOpenAIClient provided for making API requests. |
| id | str | - | The specific model ID used for generating responses. This field is required. |
| name | str | "AzureOpenAIChat" | The name identifier for the agent. |
| provider | str | "Azure" | The provider of the model. |
| storage | Optional[AgentStorage] | None | Storage mechanism for the agent, if applicable. |
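The azure_endpoint, azure_deployment and api_version parameters combine into the URL of an Azure OpenAI deployment. The api_key and azure_ad_token parameters are two alternative ways to authenticate. The sketch below uses Azure's public REST URL layout and header names. Whether the Azure model class builds requests this way is not confirmed, and preferring the Azure AD token when both credentials are given is an assumption of this sketch. The endpoint and deployment names in the example are placeholders.

```python
from typing import Dict, Optional
from urllib.parse import urlencode


def azure_chat_completions_url(
    azure_endpoint: str, azure_deployment: str, api_version: str = "2024-02-01"
) -> str:
    """Build the Azure OpenAI chat-completions URL for a single deployment."""
    query = urlencode({"api-version": api_version})
    return (
        f"{azure_endpoint.rstrip('/')}/openai/deployments/"
        f"{azure_deployment}/chat/completions?{query}"
    )


def azure_auth_headers(
    api_key: Optional[str] = None, azure_ad_token: Optional[str] = None
) -> Dict[str, str]:
    """Pick an auth header; preferring the Azure AD token is an assumption of this sketch."""
    if azure_ad_token:
        return {"Authorization": f"Bearer {azure_ad_token}"}
    if api_key:
        return {"api-key": api_key}
    raise ValueError("Provide api_key or azure_ad_token")


url = azure_chat_completions_url("https://my-resource.openai.azure.com/", "my-deployment")
print(url)
```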

| Parameter | Type | Default | Description |
| --- | --- | --- | --- |
| max_tokens | Optional[int] | 1024 | Maximum number of tokens to generate in the chat completion. |
| temperature | Optional[float] | None | Controls randomness in the model's output. |
| stop_sequences | Optional[List[str]] | None | A list of strings at which the model stops generating text. |
| top_p | Optional[float] | None | Controls diversity via nucleus sampling. |
| top_k | Optional[int] | None | Controls diversity via top-k sampling. |
| request_params | Optional[Dict[str, Any]] | None | Additional parameters to include in the request. |
| api_key | Optional[str] | None | The API key for authenticating with Anthropic. |
| client_params | Optional[Dict[str, Any]] | None | Additional parameters for client configuration. |
| client | Optional[AnthropicClient] | None | A pre-configured instance of the Anthropic client. |
| id | str | "claude-3-5-sonnet-20240620" | The ID of the Anthropic Claude model to use. |
| name | str | "Claude" | The name of the model. |
| provider | str | "Anthropic" | The provider of the model. |
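The temperature and top_k parameters are applied on the provider's side, but the underlying idea is simple. Temperature divides the logits before the softmax, so low values sharpen the distribution and high values flatten it. Top-k keeps only the k most probable tokens and renormalizes. The toy sketch below illustrates the general technique on made-up logits. It is not Anthropic's implementation. Unlike the APIs, which accept a temperature of 0, this sketch requires a positive temperature.

```python
import math
from typing import Dict


def temperature_softmax(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Convert logits to probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_k_filter(probs: Dict[str, float], k: int) -> Dict[str, float]:
    """Keep the k most probable tokens and renormalize their probabilities."""
    kept = dict(sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k])
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


logits = {"cat": 2.0, "dog": 1.0, "bird": 0.5, "fish": -1.0}  # toy values
for t in (0.2, 1.0):
    print(t, {tok: round(p, 3) for tok, p in temperature_softmax(logits, t).items()})
print(top_k_filter(temperature_softmax(logits, 1.0), k=2))
```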

| Parameter | Type | Default | Description |
| --- | --- | --- | --- |
| max_tokens | Optional[int] | 1024 | The maximum number of tokens to generate in the response. |
| temperature | Optional[float] | - | The sampling temperature to use, between 0 and 1 for Anthropic models. Higher values like 0.8 make the output more random, while lower values like 0.2 make it more focused and deterministic. |
| stop_sequences | Optional[List[str]] | - | A list of sequences where the API will stop generating further tokens. |
| top_p | Optional[float] | - | Nucleus sampling parameter. The model samples only from the smallest set of tokens whose cumulative probability reaches top_p. |
| top_k | Optional[int] | - | The number of highest-probability vocabulary tokens to keep for top-k filtering. |
| request_params | Optional[Dict[str, Any]] | - | Additional parameters to include in the request. |
| api_key | Optional[str] | - | The API key for authenticating requests to the service. |
| client_params | Optional[Dict[str, Any]] | - | Additional parameters for client configuration. |
| client | Optional[AnthropicClient] | - | A pre-configured instance of the Anthropic client. |
| id | str | "claude-3-5-sonnet-20240620" | The specific model ID used for generating responses. |
| name | str | "Claude" | The name identifier for the agent. |
| provider | str | "Anthropic" | The provider of the model. |
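Nucleus (top_p) sampling differs from top-k in that it keeps a variable number of tokens. It sorts tokens by probability and keeps the smallest prefix whose cumulative probability reaches top_p, then renormalizes. The toy sketch below illustrates the general technique on a made-up distribution. It is not the provider's implementation.

```python
from typing import Dict


def top_p_filter(probs: Dict[str, float], top_p: float) -> Dict[str, float]:
    """Keep the smallest set of most-probable tokens whose cumulative probability reaches top_p."""
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    kept: Dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break  # the nucleus is complete
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


probs = {"cat": 0.5, "dog": 0.3, "bird": 0.15, "fish": 0.05}  # toy distribution
print(top_p_filter(probs, 0.75))
```

With top_p = 0.75, "cat" alone (0.5) is not enough, so "dog" is added and the remaining two tokens are dropped.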

| Parameter | Type | Default | Description |
| --- | --- | --- | --- |
| max_tokens | int | 4096 | The maximum number of tokens to generate in the response. |
| temperature | Optional[float] | - | The sampling temperature to use, between 0 and 1 for Anthropic models. Higher values like 0.8 make the output more random, while lower values like 0.2 make it more focused and deterministic. |
| top_p | Optional[float] | - | The nucleus sampling parameter. The model samples only from the smallest set of tokens whose cumulative probability reaches top_p. |
| top_k | Optional[int] | - | The number of highest-probability vocabulary tokens to keep for top-k filtering. |
| stop_sequences | Optional[List[str]] | - | A list of sequences where the API will stop generating further tokens. |
| anthropic_version | str | "bedrock-2023-05-31" | The version of the Anthropic API to use. |
| request_params | Optional[Dict[str, Any]] | - | Additional parameters for the request, provided as a dictionary. |
| client_params | Optional[Dict[str, Any]] | - | Additional client parameters for initializing the AwsBedrock client, provided as a dictionary. |
| id | str | "anthropic.claude-3-sonnet-20240229-v1:0" | The specific model ID used for generating responses. |
| name | str | "AwsBedrockAnthropicClaude" | The name identifier for the Claude agent. |
| provider | str | "AwsBedrock" | The provider of the model. |
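On Amazon Bedrock, Anthropic models take a JSON body that carries the anthropic_version string alongside the sampling parameters. The sketch below builds such a body from the parameters listed for the Bedrock Claude model and omits any that are unset. It follows the public Bedrock message format for Anthropic models. Whether the AwsBedrock model class assembles its body exactly this way is an assumption.

```python
import json
from typing import Any, Dict, List, Optional


def build_bedrock_claude_body(
    messages: List[Dict[str, Any]],
    max_tokens: int = 4096,
    anthropic_version: str = "bedrock-2023-05-31",
    temperature: Optional[float] = None,
    top_p: Optional[float] = None,
    top_k: Optional[int] = None,
    stop_sequences: Optional[List[str]] = None,
) -> str:
    """Serialize an Anthropic-on-Bedrock request body, skipping unset sampling options."""
    body: Dict[str, Any] = {
        "anthropic_version": anthropic_version,
        "max_tokens": max_tokens,
        "messages": messages,
    }
    optional = {
        "temperature": temperature,
        "top_p": top_p,
        "top_k": top_k,
        "stop_sequences": stop_sequences,
    }
    body.update({key: value for key, value in optional.items() if value is not None})
    return json.dumps(body)


body = build_bedrock_claude_body([{"role": "user", "content": "Hello!"}], temperature=0.2)
print(body)
```

A string like this is what boto3's `bedrock-runtime` client accepts as the `body` argument of `invoke_model`, together with a `modelId` such as the default listed above.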

| Parameter | Type | Default | Description |
| --- | --- | --- | --- |
| api_key | Optional[str] | None | The API key used to authorize requests to Together. Defaults to the TOGETHER_API_KEY environment variable. |
| base_url | str | "https://api.together.xyz/v1" | The base URL for API requests. |
| monkey_patch | bool | False | Whether to apply monkey patching. |
| id | str | "mistralai/Mixtral-8x7B-Instruct-v0.1" | The ID of the Together model to use. |
| name | str | "Together" | The name of this chat model instance. |
| provider | str | "Together " + id | The provider of the model. |
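Two documented behaviours of the Together model are easy to miss: api_key falls back to the TOGETHER_API_KEY environment variable, and the provider string is derived from the model ID. The sketch below reproduces both and targets Together's OpenAI-compatible chat-completions path under base_url. The helper name `resolve_together_config` is hypothetical. The returned dictionary layout is also an assumption, not the class's internals.

```python
import os
from typing import Dict, Optional


def resolve_together_config(
    api_key: Optional[str] = None,
    model_id: str = "mistralai/Mixtral-8x7B-Instruct-v0.1",
    base_url: str = "https://api.together.xyz/v1",
) -> Dict[str, object]:
    """Resolve the API key (explicit value first, then TOGETHER_API_KEY) and request settings."""
    key = api_key or os.environ.get("TOGETHER_API_KEY")
    if not key:
        raise RuntimeError("Pass api_key or set the TOGETHER_API_KEY environment variable")
    return {
        "url": base_url.rstrip("/") + "/chat/completions",  # OpenAI-compatible endpoint
        "headers": {"Authorization": f"Bearer {key}"},
        "provider": "Together " + model_id,  # mirrors the documented provider default
    }


os.environ["TOGETHER_API_KEY"] = "example-key"  # placeholder value for the demo only
config = resolve_together_config()
print(config["url"], config["provider"])
```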

| Function | Description |
| --- | --- |
| create_issue | Creates a new issue in a repository. |
| search_repositories | Searches GitHub repositories based on a query. |
| list_repositories | Lists repositories for a given user or organization. |
| get_repository | Gets details about a specific repository. |
| list_pull_requests | Lists pull requests for a repository. |
| get_pull_request | Gets details about a specific pull request. |
| get_pull_request_changes | Gets the file changes in a pull request. |

| Parameter | Type | Default | Description |
| --- | --- | --- | --- |
| list_repositories | bool | True | Enable listing repositories for a user or organization. |
| get_repository | bool | True | Enable getting repository details. |
| list_pull_requests | bool | True | Enable listing pull requests for a repository. |
| get_pull_request | bool | True | Enable getting pull request details. |
| get_pull_request_changes | bool | True | Enable getting pull request file changes. |
| create_issue | bool | True | Enable creating issues in repositories. |
| access_token | str | None | GitHub access token for authentication. If not provided, the GITHUB_ACCESS_TOKEN environment variable is used. |
| base_url | str | None | Optional base URL for GitHub Enterprise installations. |
| search_repositories | bool | True | Enable searching GitHub repositories. |
| update_issue | bool | True | Enable the update_issue tool. |

get_user_assigned_issues

bool

True